Evolutionary Algorithms

Evolutionary computation is a branch of artificial intelligence that draws inspiration from natural selection and evolutionary biology to solve complex search and optimization problems. Its algorithms maintain a population of candidate solutions and improve it over successive generations using mechanisms modeled on biological evolution, such as reproduction, mutation, recombination, and selection.
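As a rough illustration, the sketch below is a minimal genetic algorithm, one common kind of evolutionary algorithm, applied to the toy "OneMax" problem (evolve a bit string toward all 1s). The problem, the function names, and the parameter values (population size, mutation rate, tournament size of 3) are illustrative assumptions rather than a prescribed method; each of the four mechanisms named above maps to one function.

```python
import random


def fitness(genome: list[int]) -> int:
    # OneMax (toy problem, assumed for illustration): count the 1 bits
    return sum(genome)


def tournament(population, k, rng):
    # Selection: sample k individuals at random and keep the fittest
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng):
    # Recombination: single-point crossover of two parent genomes
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(genome, rate, rng):
    # Mutation: flip each bit independently with probability `rate`
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(length=20, pop_size=30, generations=100, rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        best = max(population, key=fitness)
        if fitness(best) == length:
            break  # optimum found
        # Reproduction: keep the current best (elitism), fill the rest with offspring
        offspring = [best]
        while len(offspring) < pop_size:
            parent_a = tournament(population, 3, rng)
            parent_b = tournament(population, 3, rng)
            offspring.append(mutate(crossover(parent_a, parent_b, rng), rate, rng))
        population = offspring
    return max(population, key=fitness)


best = evolve()
print(fitness(best), best)
```

Because the best individual is always copied into the next generation, the best fitness in this sketch can never decrease from one generation to the next.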

Neural Computing

Connectionist models are a cornerstone of modern artificial intelligence and machine learning. They draw inspiration from the biological nervous system, particularly the brain's interconnected network of neurons. Mimicking this architecture, connectionist models consist of layers of artificial neurons, or nodes, that receive, transform, and transmit signals to one another.
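To make the idea of layered neurons passing signals concrete, here is a minimal sketch of a two-layer network computing XOR, a function no single threshold neuron can represent because its outputs are not linearly separable. The weights are hand-chosen for illustration (an assumption, not learned from data); in practice they would be fitted by a training procedure such as gradient descent.

```python
def step(x: float) -> int:
    # Threshold activation: the neuron "fires" (outputs 1) when its input is positive
    return 1 if x > 0 else 0


def layer(inputs, weights, biases):
    # Each neuron takes a weighted sum of all inputs plus its bias,
    # then passes the result through the activation function
    return [step(sum(w * x for w, x in zip(ws, inputs)) + b)
            for ws, b in zip(weights, biases)]


# Hand-chosen (hypothetical) weights:
# hidden neuron 1 behaves like OR, hidden neuron 2 like NAND
HIDDEN_W = [[1.0, 1.0], [-1.0, -1.0]]
HIDDEN_B = [-0.5, 1.5]
# The output neuron behaves like AND of the two hidden signals
OUTPUT_W = [[1.0, 1.0]]
OUTPUT_B = [-1.5]


def forward(x1: int, x2: int) -> int:
    # Signals flow input -> hidden layer -> output layer
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", forward(a, b))
```

The loop prints the XOR truth table: the output is 1 exactly when one input, but not both, is 1.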

Distributed Computing

Distributed computing is a model in which a computational task is divided across multiple systems or machines, which communicate and coordinate their efforts to complete the task more efficiently than a single device could alone. This approach combines the resources of several computers to process large datasets, handle complex computations, or serve a large number of requests simultaneously.
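The divide, process, and coordinate pattern described above can be sketched as a scatter/gather word count. This is a simplified, single-machine stand-in: the worker threads here are assumed to play the role of separate machines, whereas a real distributed system would ship each chunk over a network and would also have to handle node failures and retries. The function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    # Divide the task: assign items round-robin to n_chunks workers
    return [items[i::n_chunks] for i in range(n_chunks)]


def count_words(lines):
    # The independent piece of work one "node" performs on its chunk
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(lines, n_workers=4):
    chunks = split_into_chunks(lines, n_workers)
    # Each thread stands in for a separate machine (an assumption of this sketch)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_counts = list(pool.map(count_words, chunks))
    # Coordinate: merge the partial results into a single answer
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


lines = ["the quick brown fox", "jumps over the lazy dog", "the dog sleeps"]
print(distributed_word_count(lines, n_workers=2).most_common(2))
```

Here the call prints `[('the', 3), ('dog', 2)]`. The key design point is that `count_words` needs no shared state, so chunks can be processed anywhere and merged afterwards.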

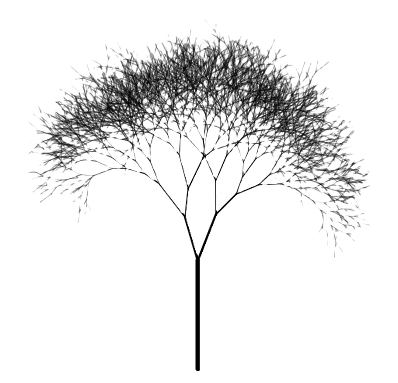

Lindenmayer Systems

Lindenmayer systems, or L-systems, are a mathematical modeling method used to simulate the growth processes of plants and other organisms. These systems operate on strings of symbols that represent various parts of a plant, such as leaves, branches, and flowers. At each iteration, production rules rewrite every symbol in the string simultaneously, typically replacing it with a longer sequence of symbols, so that each iteration models one developmental step. Repeated many times, this process generates intricate patterns that can closely mimic the complex structures found in nature.
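The rewriting step can be sketched in a few lines. The example below uses the classic two-symbol "algae" system often used to introduce L-systems (axiom `A`, rules `A → AB` and `B → A`); the function name is illustrative. Plant-like drawings are usually produced by interpreting symbols of a richer alphabet as drawing commands, which this sketch omits.

```python
def apply_rules(axiom: str, rules: dict[str, str], generations: int) -> str:
    s = axiom
    for _ in range(generations):
        # Rewrite every symbol in parallel; symbols with no rule are copied unchanged
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


# Classic algae example: A -> AB, B -> A
ALGAE = {"A": "AB", "B": "A"}

for n in range(6):
    print(n, apply_rules("A", ALGAE, n))
```

This prints `A`, `AB`, `ABA`, `ABAAB`, `ABAABABA`, and `ABAABABAABAAB` for generations 0 to 5; the string lengths (1, 2, 3, 5, 8, 13) follow the Fibonacci sequence.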

Process Simulation

Process simulation, in a broader context, is the use of computer models to replicate and analyze the dynamics of any process or system. The technique extends beyond physical systems to business processes, software operations, and logistical workflows, among others. Because its algorithms mimic how these processes operate, simulation lets stakeholders observe outcomes under different scenarios without implementing real-world changes.
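As a small example of comparing scenarios without changing the real process, the sketch below simulates a hypothetical first-come, first-served service desk and compares average waiting time with one versus two staff members. The exponential arrival and service times, their means, and the function names are all illustrative assumptions; a real study would use distributions fitted to observed data.

```python
import heapq
import random


def simulate_queue(arrivals, service_times, servers):
    """Return each customer's waiting time in a first-come, first-served queue.

    Assumes `arrivals` is sorted in non-decreasing order.
    """
    free_at = [0.0] * servers  # time at which each server next becomes free
    heapq.heapify(free_at)
    waits = []
    for arrive, service in zip(arrivals, service_times):
        earliest_free = heapq.heappop(free_at)  # next available server
        start = max(arrive, earliest_free)
        waits.append(start - arrive)
        heapq.heappush(free_at, start + service)
    return waits


def random_scenario(n, mean_gap, mean_service, seed=42):
    # Hypothetical workload: exponential gaps between arrivals and exponential service times
    rng = random.Random(seed)
    t, arrivals = 0.0, []
    for _ in range(n):
        t += rng.expovariate(1 / mean_gap)
        arrivals.append(t)
    services = [rng.expovariate(1 / mean_service) for _ in range(n)]
    return arrivals, services


arrivals, services = random_scenario(1000, mean_gap=1.0, mean_service=0.9)
for c in (1, 2):
    waits = simulate_queue(arrivals, services, servers=c)
    print(f"{c} server(s): mean wait {sum(waits) / len(waits):.2f}")
```

Feeding the same arrival and service sequences to both scenarios keeps the comparison fair: any difference in waiting time comes from the staffing change alone.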

Corpus Linguistics

Corpus linguistics can be used to investigate old and archival text data extracted from the early phases of internet networks. The field uses computer-assisted methods to examine and interpret patterns, structures, and the frequency of linguistic elements within these large datasets.
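Two of the most basic computer-assisted corpus techniques are frequency lists and keyword-in-context (KWIC) concordances. The sketch below shows both on a short, invented text snippet standing in for archived early-web content; the snippet, the simple regular-expression tokenizer, and the function names are assumptions for illustration. Real archival corpora usually need more careful tokenization and cleanup of markup and encoding issues.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits, and apostrophes as tokens
    return re.findall(r"[a-z0-9']+", text.lower())


def frequency_list(tokens, top=5):
    # Most frequent tokens with their counts
    return Counter(tokens).most_common(top)


def concordance(tokens, keyword, window=3):
    # Keyword-in-context: show `window` tokens on each side of every occurrence
    lines = []
    for i, tok in enumerate(tokens):
        if tok == keyword:
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            lines.append(f"{left:>25} [{tok}] {right}")
    return lines


# Hypothetical snippet standing in for an archived early-web page
corpus = """Welcome to my home page! This page is under construction.
Sign my guestbook and check out my links page."""

tokens = tokenize(corpus)
print(frequency_list(tokens, top=3))
for line in concordance(tokens, "page"):
    print(line)
```

The frequency list shows which words dominate a text, while the concordance lets a researcher inspect how a word is actually used in its surrounding context.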